The simplest possible Bayesian model

2013.04.16

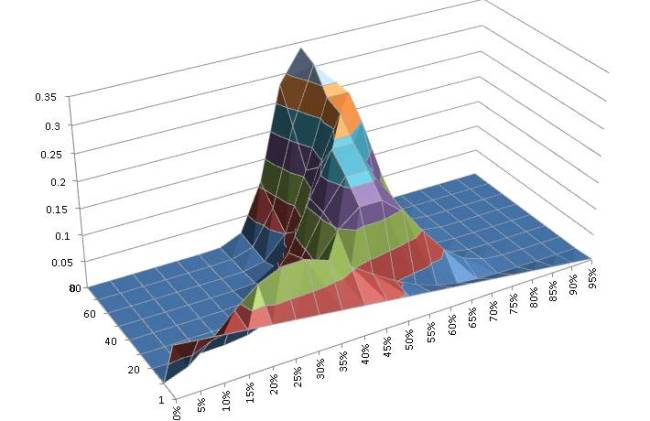

In chapter 5, Kruschke goes through the process of using Bayes’ rule for updating belief in the ‘lopsidedness’ of a coin. Instead of using R, I decided to try implementing the model in Excel.

The Model

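Coin tosses are IID with a Bernoulli($\theta$) distribution, thus the probability of observing a particular sequence of $N$ tosses of which $z$ are heads is

$$p(z, N \mid \theta) = \theta^{z} (1 - \theta)^{N - z}.$$

Using Bayes’ rule we get

$$p(\theta \mid z, N) = \frac{\theta^{z} (1 - \theta)^{N - z} \, p(\theta)}{p(z, N)}.$$

Note that if the prior $p(\theta)$ is already of the form

$$p(\theta) = \mathrm{beta}(\theta \mid a, b) = \frac{\theta^{a - 1} (1 - \theta)^{b - 1}}{B(a, b)},$$

where the denominator is a normalizing constant, then $p(\theta \mid z, N) = \mathrm{beta}(\theta \mid a + z, \; b + N - z)$.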
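The update rule for a beta prior amounts to adding counts to the two shape parameters. Here is a minimal Python sketch of that bookkeeping. The function names and the argument names `a`, `b` (prior shape parameters), `z` (number of heads) and `n` (number of tosses) are chosen here for illustration and are not taken from the book or the spreadsheet.

```python
def update_beta(a, b, z, n):
    """Posterior shape parameters of a beta prior after z heads in n tosses.

    a, b: shape parameters of the beta prior (illustrative names)
    z, n: observed heads and total tosses
    """
    if not 0 <= z <= n:
        raise ValueError("z must lie between 0 and n")
    # Heads are added to the first parameter, tails to the second.
    return a + z, b + (n - z)


def beta_mean(a, b):
    """Mean of a beta(a, b) distribution."""
    return a / (a + b)


# Uniform prior beta(1, 1), then 3 heads in 10 tosses.
post_a, post_b = update_beta(1, 1, 3, 10)
print(post_a, post_b)  # 4 8
```

Because the posterior is again a beta distribution, it can serve as the prior for the next batch of tosses, so updating in one batch or in several gives the same result.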

Excel implementation

This is straightforward using the BETADIST function. BETADIST is cumulative, so we divide the unit interval into equally spaced subintervals, compute the prior probability of each subinterval as the difference between BETADIST at its two endpoints, and then update those probabilities on the basis of the new observations.
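For readers without Excel, the same binning idea can be sketched in Python. This is only an approximation of the spreadsheet approach, under some assumptions of mine: `beta_cdf` stands in for BETADIST and uses a closed form that only holds for positive integer shape parameters, the likelihood is evaluated at each bin's midpoint, and all function names are hypothetical.

```python
from math import comb


def beta_cdf(x, a, b):
    """Cumulative beta distribution for positive integer a and b.

    Stand-in for Excel's BETADIST, using the binomial-sum identity
    that is valid for integer shape parameters only.
    """
    m = a + b - 1
    return sum(comb(m, j) * x**j * (1 - x) ** (m - j) for j in range(a, m + 1))


def bin_probabilities(a, b, bins):
    """Probability that theta falls in each of `bins` equal subintervals of [0, 1]."""
    edges = [i / bins for i in range(bins + 1)]
    return [beta_cdf(hi, a, b) - beta_cdf(lo, a, b)
            for lo, hi in zip(edges, edges[1:])]


def grid_update(prior, z, n):
    """Update bin probabilities after observing z heads in n tosses.

    The Bernoulli likelihood is evaluated at each bin midpoint and the
    result is renormalized so the bin probabilities sum to one.
    """
    bins = len(prior)
    mids = [(i + 0.5) / bins for i in range(bins)]
    unnorm = [p * t**z * (1 - t) ** (n - z) for p, t in zip(prior, mids)]
    total = sum(unnorm)
    return [u / total for u in unnorm]


prior = bin_probabilities(1, 1, 100)       # uniform prior on 100 bins
posterior = grid_update(prior, z=3, n=10)  # 3 heads in 10 tosses
```

With a fine enough grid, the updated bin probabilities should stay close to those obtained directly from the beta posterior, which gives a quick sanity check on the spreadsheet.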

Here’s the result.
